Publications

Asterisks (“*”) indicate equal contribution.

Please refer to my Google Scholar page for the full list.

How LLMs Comprehend Temporal Meaning in Narratives: A Case Study in Cognitive Evaluation of LLMs
Karin de Langis, Jong Inn Park, Andreas Schramm, Bin Hu, Khanh Chi Le, Dongyeop Kang
ACL Main, 2025
Assesses LLM comprehension of narrative temporal aspect with an expert-in-the-loop probe pipeline, revealing over-reliance on prototypicality, inconsistent aspect judgments, and weak causal reasoning, and introducing a standardized framework for evaluating cognitive–linguistic capabilities.

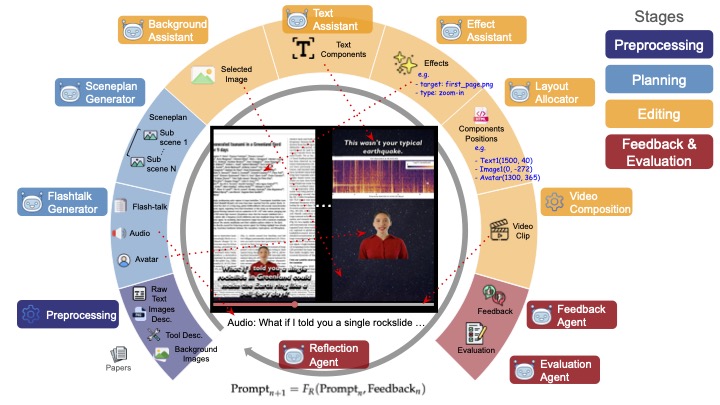

Stealing Creator's Workflow: A Creator-Inspired Agentic Framework with Iterative Feedback Loop for Improved Scientific Short-form Generation
Jong Inn Park, Maanas Taneja, Qianwen Wang, Dongyeop Kang
arXiv, 2025
arXiv / project page
Proposes SciTalk, a creator-inspired multi-LLM pipeline that grounds short-form science videos in text, figures, and style cues, using specialized agents and iterative feedback to surpass one-shot prompting in factual accuracy and engagement.

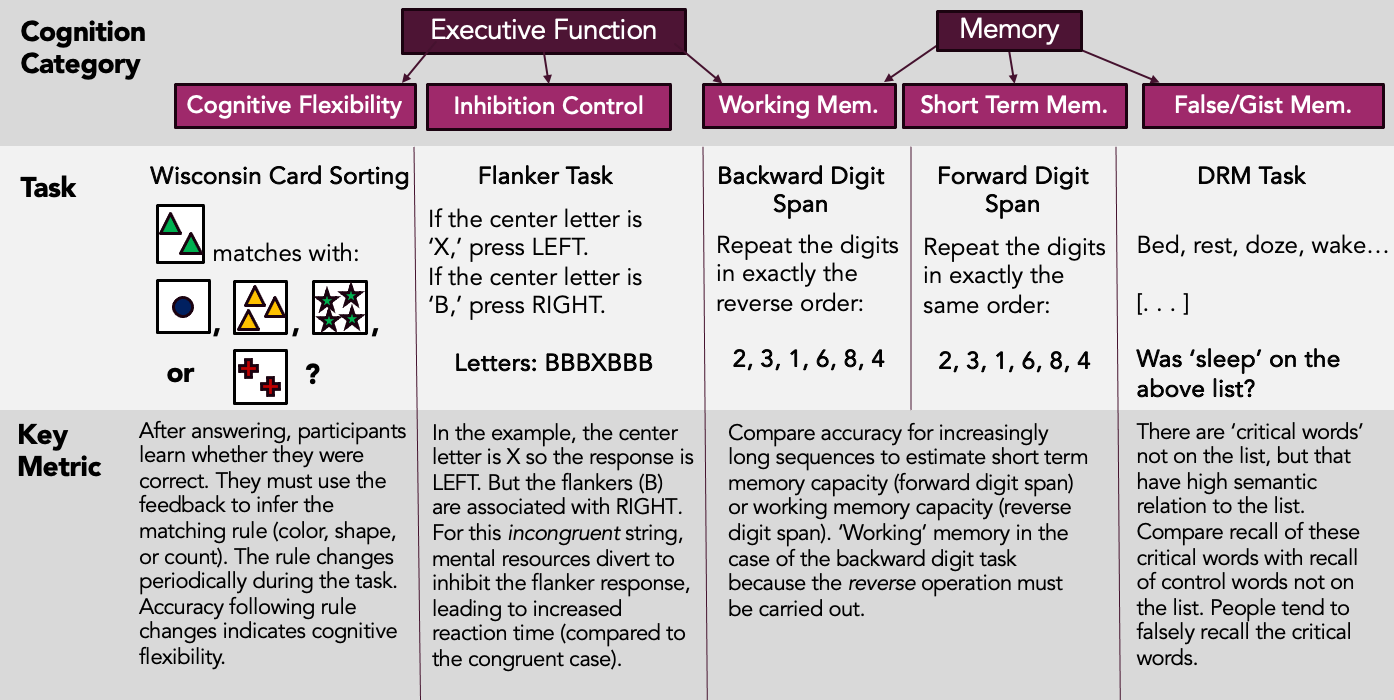

A Framework for Robust Cognitive Evaluation of LLMs
Karin de Langis, Jong Inn Park, Bin Hu, Khanh Chi Le, Andreas Schramm, Michael C Mensink, Andrew Elfenbein, Dongyeop Kang
arXiv, 2025
arXiv
Introduces CognitivEval, a robust evaluation framework that uses automatic prompt permutations and dual generation-probability testing to probe LLM cognition, replicating five classic cognitive-science experiments and profiling state-of-the-art models for standardized, reproducible research.

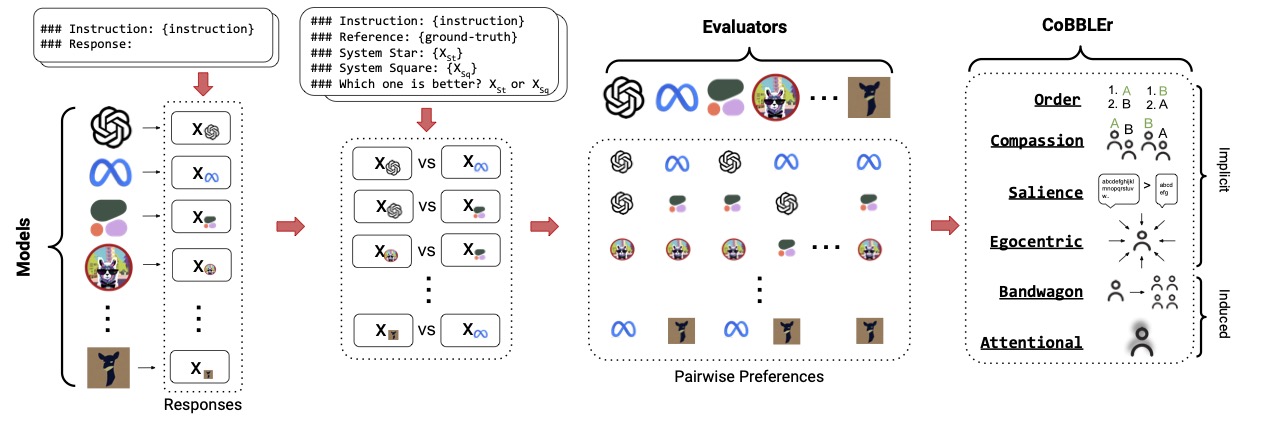

Benchmarking Cognitive Biases in Large Language Models as Evaluators
Ryan Koo, Minhwa Lee, Vipul Raheja, Jong Inn Park, Zae Myung Kim, Dongyeop Kang
Findings of ACL, 2024
arXiv / project page / code / data
Evaluated 16 large language models (LLMs) as automatic evaluators using preference ranking and introduced the Cognitive Bias Benchmark for LLMs as Evaluators (COBBLER), revealing significant cognitive biases and misalignment with human preferences, indicating limitations in using LLMs for automatic annotation.

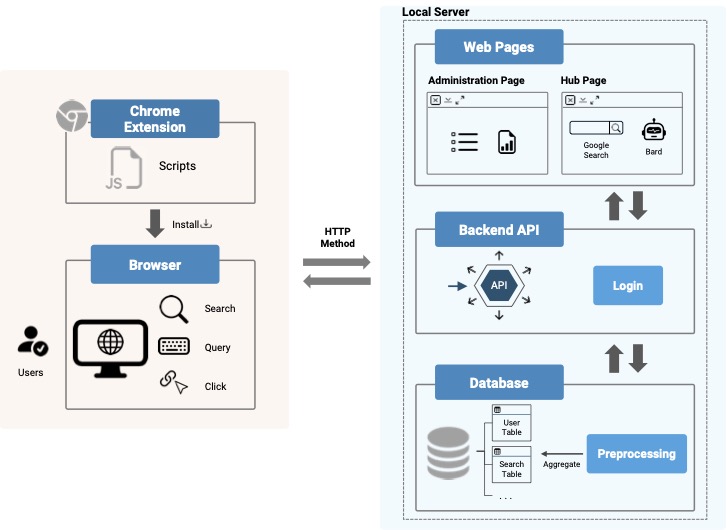

Consumer Engagement With AI-Powered Search Engines and Implications for the Future of Search Advertising
Gabriel Garlough-Shah, Jong Inn Park, Shirley Anugrah Hayati, Dongyeop Kang, Jisu Huh
Advertising Division of AEJMC, 2024
Explored how consumers choose between AI-powered search engines (AIPSEs) and traditional search engines (TSEs), and what motivates their use of each, by deploying a Chrome extension to users, highlighting differences in motivations and use behavior across the two.

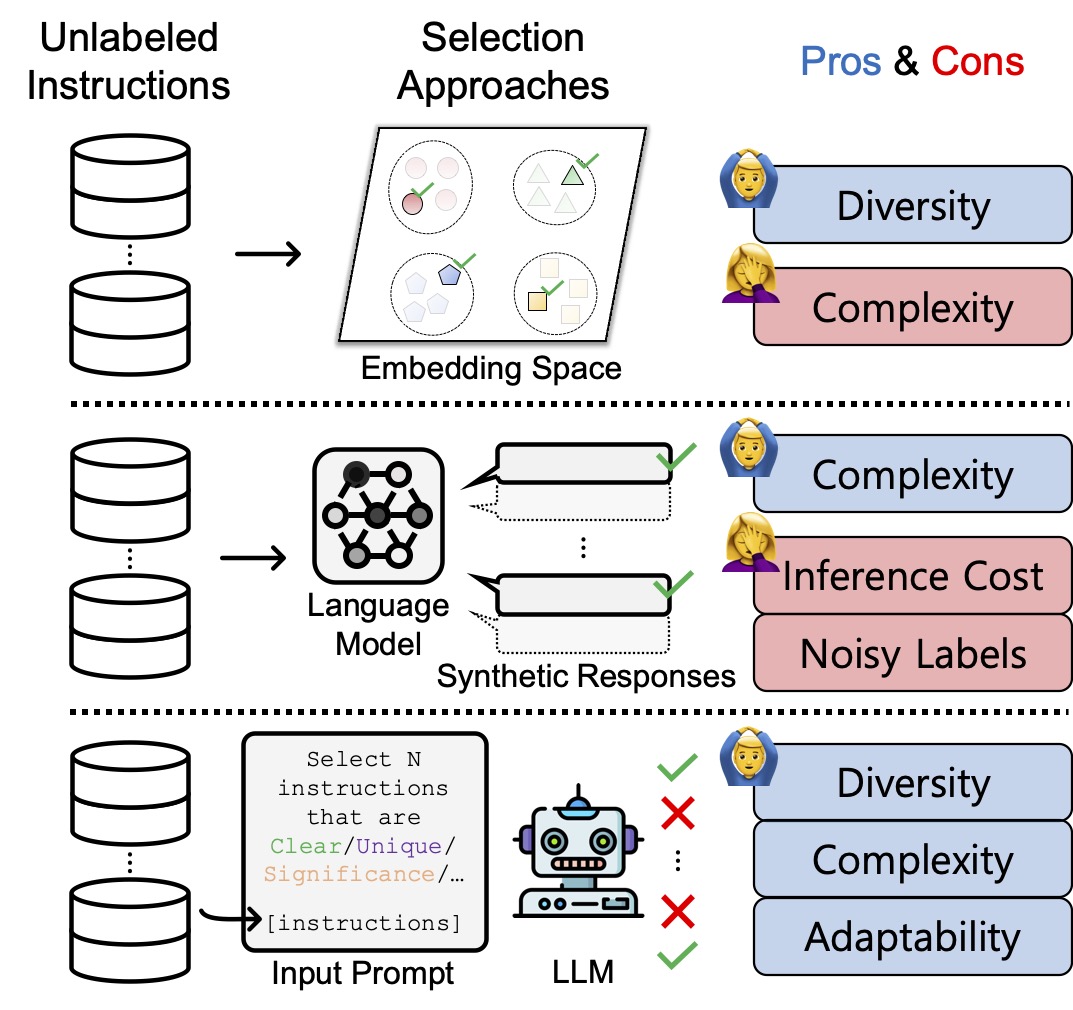

SelectLLM: Can LLMs Select Important Instructions to Annotate?
Ritik Sachin Parkar*, Jaehyung Kim*, Jong Inn Park, Dongyeop Kang
arXiv, 2024
arXiv / code
Developed SelectLLM, a framework utilizing coreset-based clustering and large language models to enhance the selection of unlabeled instructions for improved instruction tuning performance.

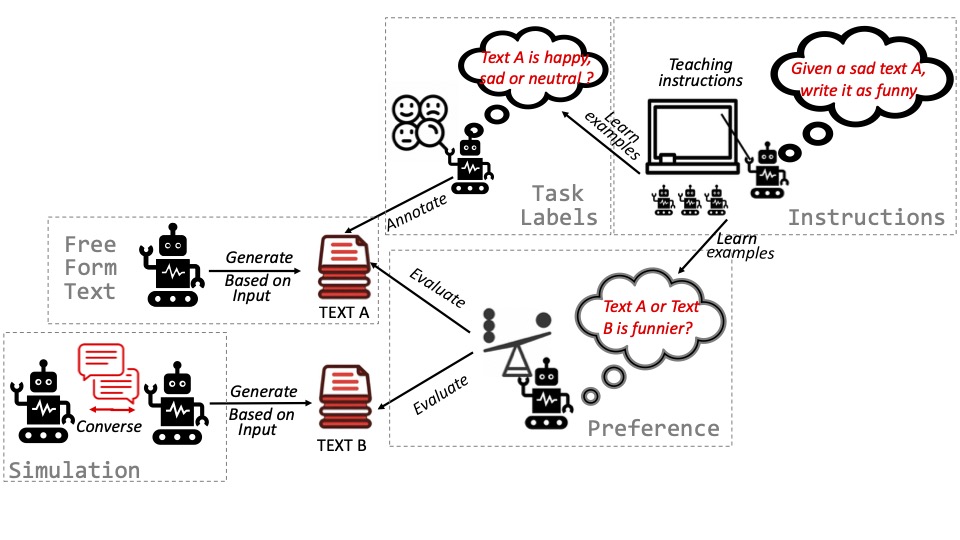

Under the Surface: Tracking the Artifactuality of LLM-Generated Data
D. Das*, K.D. Langis*, A. Martin*, J. Kim*, M. Lee*, Z.M. Kim*, S. Hayati, R. Owan, B. Hu, R. Parkar, R. Koo, J.I. Park, A. Tyagi, L. Ferland, S. Roy, V. Liu, D. Kang
arXiv, 2024
arXiv / project page / code / data
Explored the expanding role of large language models (LLMs) in generating artificial data by analyzing various types of LLM-generated text and their implications. The analysis reveals significant disparities from human data, especially in complex tasks, and emphasizes the need for ethical data-creation practices and for addressing biases.